Flowman 1.1.0 released

We are happy to announce the release of Flowman 1.1.0. This release contains many small improvements and bugfixes. Flowman now finally supports Spark 3.4.1. Major

Flowman is a declarative ETL framework and data build tool powered by Apache Spark. It reads, processes and writes data from and to a wide variety of physical storage systems, such as relational databases, files, and object stores. It can easily join data sets from different source systems to create an integrated data model. This makes Flowman a powerful tool for building complex data transformation pipelines for the modern data stack.

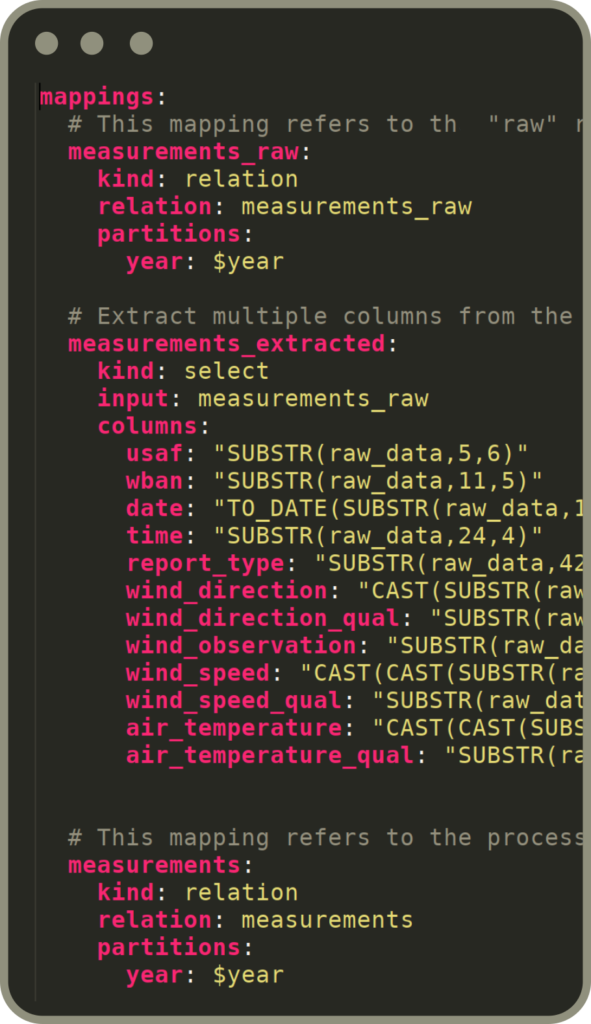

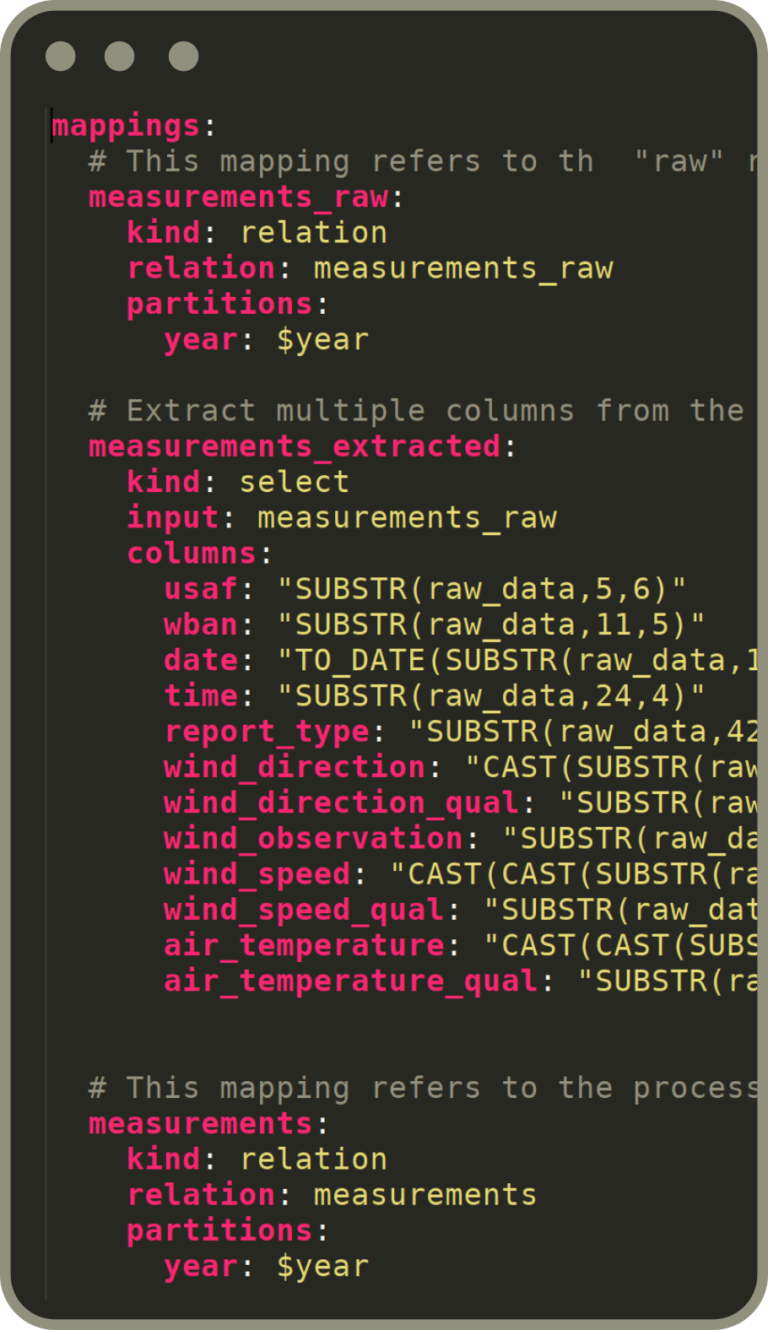

For defining all data sources, sinks and the transformations between them, Flowman follows a purely declarative approach using plain YAML files. Developers can focus on the business logic, while Flowman takes care of executing the data flow and managing the data models.
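The declarative idea can be illustrated with a toy sketch in Python. This is not Flowman's API or its YAML schema: the keys `relations`, `mappings` and `targets`, the mapping kinds, and the helpers `evaluate` and `build` are all hypothetical names chosen for illustration. The point is only that the data flow is described as data, and a generic engine decides how to execute it.

```python
# Toy illustration of a declarative data flow; NOT Flowman's API or YAML schema.
# All keys ("relations", "mappings", "targets") and helper names are hypothetical.

SPEC = {
    # Data sources, here simply in-memory rows
    "relations": {
        "orders": [
            {"id": 1, "country": "DE", "amount": 10.0},
            {"id": 2, "country": "US", "amount": 5.0},
            {"id": 3, "country": "DE", "amount": 7.5},
        ],
    },
    # Transformations, each referring to its input by name
    "mappings": {
        "orders_de": {"kind": "filter", "input": "orders",
                      "column": "country", "equals": "DE"},
        "revenue_de": {"kind": "sum", "input": "orders_de", "column": "amount"},
    },
    # What should actually be built
    "targets": ["revenue_de"],
}


def evaluate(spec, name):
    """Resolve a relation or mapping by name, evaluating its input first."""
    if name in spec["relations"]:
        return spec["relations"][name]
    mapping = spec["mappings"][name]
    rows = evaluate(spec, mapping["input"])
    if mapping["kind"] == "filter":
        return [r for r in rows if r[mapping["column"]] == mapping["equals"]]
    if mapping["kind"] == "sum":
        return sum(r[mapping["column"]] for r in rows)
    raise ValueError(f"unknown mapping kind: {mapping['kind']}")


def build(spec):
    """Build every target listed in the spec and return the results by name."""
    return {target: evaluate(spec, target) for target in spec["targets"]}


result = build(SPEC)
print(result)
```

The specification contains no control flow of its own; changing the pipeline means editing the description, not the engine.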

Being built on top of Apache Spark, Flowman can both process small amounts of data on a local machine and scale out to large multi-machine clusters (Hadoop, Kubernetes, AWS EMR and Azure Synapse) for processing terabytes of data.

Lightweight specification of data models, transformations and build targets using declarative syntax instead of complex application code.

Modern development methodology following the "everything is code" approach, supporting collaboration via any version control system. Support for self-contained unit tests, automatic documentation and data quality checks.

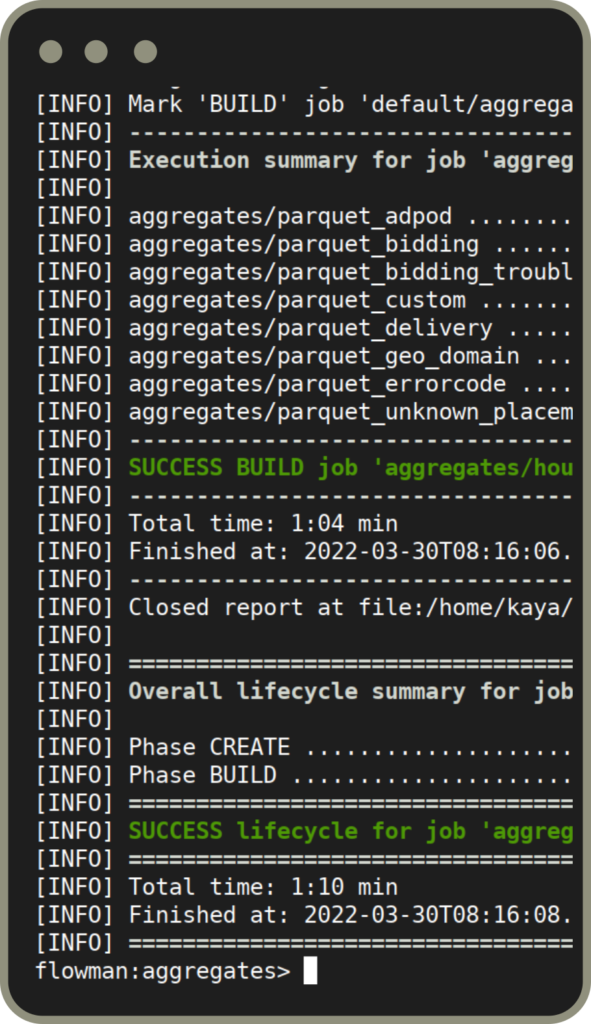

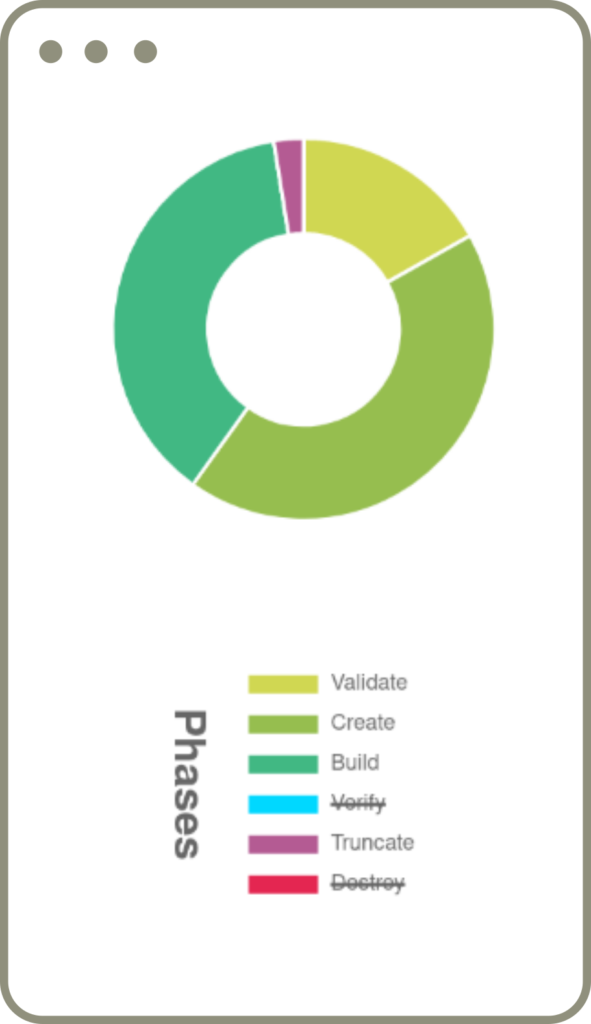

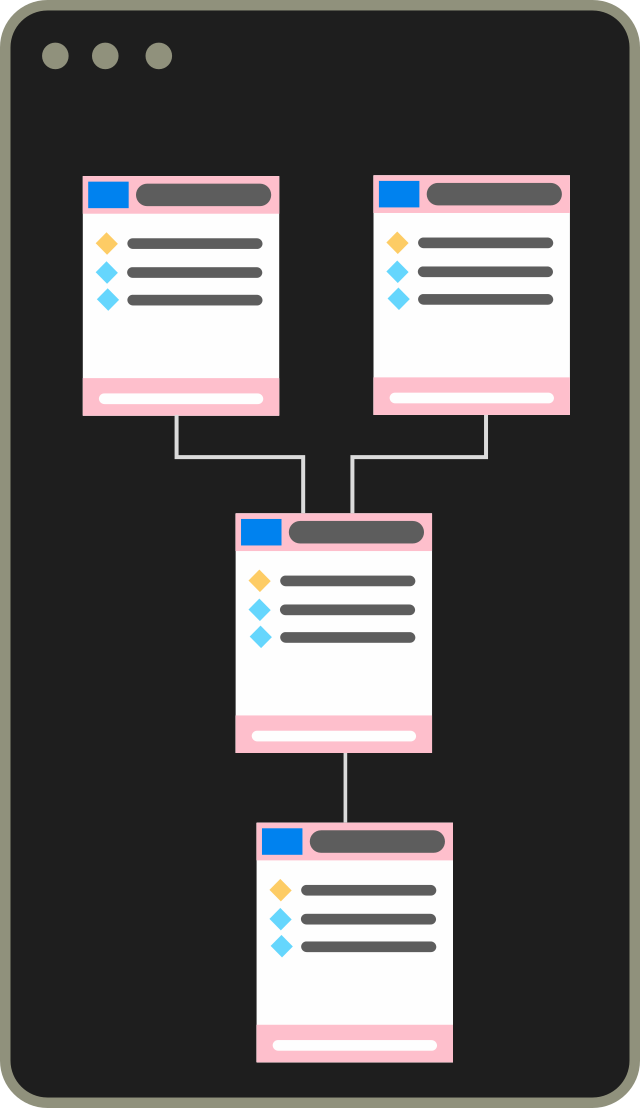

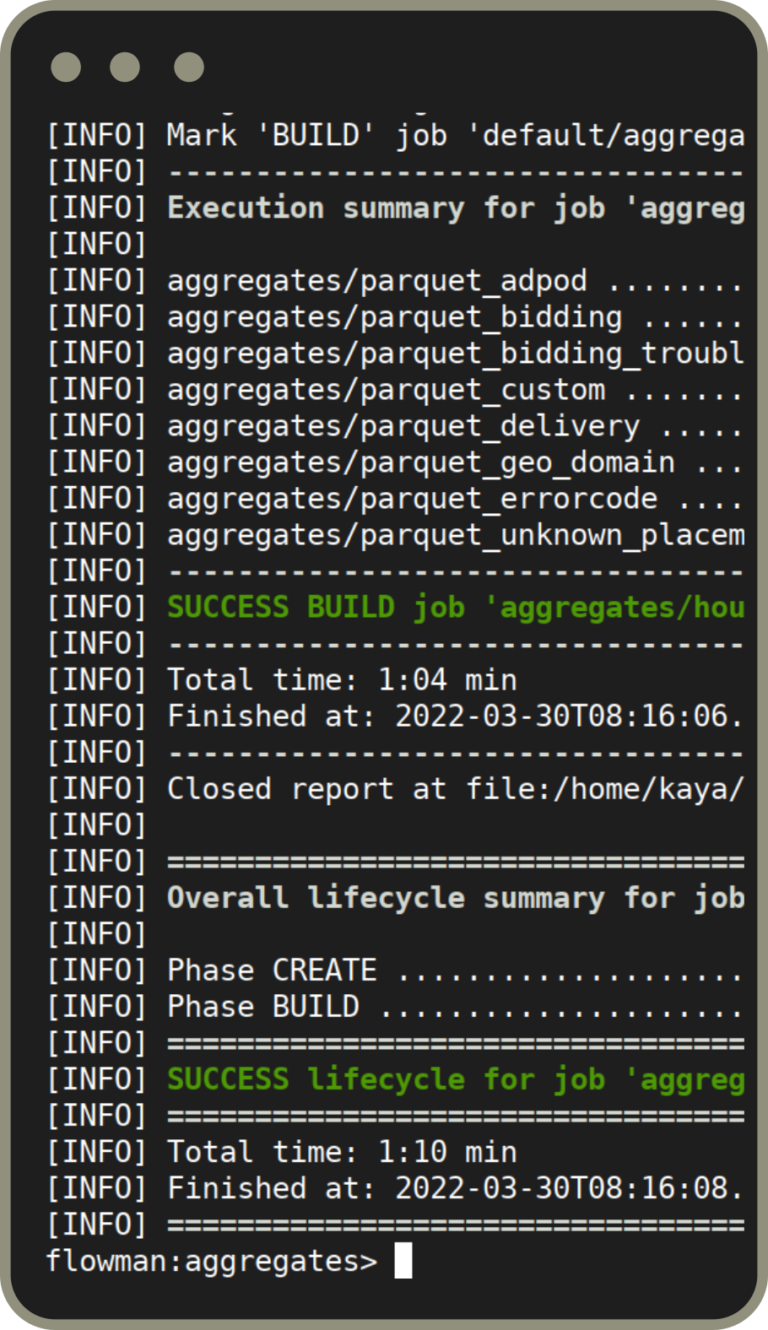

Full lifecycle management of your data models, including creating target tables, automatic migration, and eventual removal. Automatic documentation of data flows, including lineage and quality checks. Job history server. Business-defined execution metrics.

Implement complex transformations step by step, create self-contained unit tests at any point in the chain of transformations. Flowman will execute the whole flow without sacrificing performance by applying end-to-end query optimizations.

Design all details of your data models, grow and evolve them over time. Add descriptions and expectations to columns. Flowman will create the physical model including descriptions and automatically migrate existing tables to the desired state.

Implement self-contained unit tests for your business logic by mocking the real data sources. Add data quality checks before and after pipeline execution. Collect meaningful execution metrics including data quality.
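The testing pattern described above can be sketched generically in Python. This is an assumption-laden illustration, not Flowman's test specification: `run_pipeline`, `revenue_by_country`, `check_no_negative_amounts` and `mock_source` are hypothetical names. The business logic receives its data source as a parameter, so a test can substitute fixed rows for the real source, and a quality check runs before the transformation.

```python
# Generic sketch of testing business logic against a mocked data source.
# NOT Flowman's test syntax; every name below is hypothetical.

def revenue_by_country(rows):
    """Business logic under test: total amount per country."""
    totals = {}
    for row in rows:
        totals[row["country"]] = totals.get(row["country"], 0.0) + row["amount"]
    return totals


def check_no_negative_amounts(rows):
    """Data quality check executed before the transformation."""
    return all(row["amount"] >= 0 for row in rows)


def run_pipeline(read_source):
    """Read rows from the given source, validate them, then transform them."""
    rows = read_source()
    if not check_no_negative_amounts(rows):
        raise ValueError("quality check failed: negative amount")
    return revenue_by_country(rows)


def mock_source():
    """Stand-in for the real data source, returning fixed test rows."""
    return [
        {"country": "DE", "amount": 10.0},
        {"country": "US", "amount": 5.0},
        {"country": "DE", "amount": 7.5},
    ]


totals = run_pipeline(mock_source)
print(totals)
```

Because the source is injected, the same `run_pipeline` function can be exercised without access to any production system.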

Don’t reinvent the wheel by writing more boilerplate code. Focus on critical business logic and delegate the tricky details to a clever tool. Introduction Apache

smartclip is a successful and growing company specializing in online video advertising. More importantly, smartclip was one of the first companies implementing Flowman for their

Flowman version 1.0.0 has finally arrived. For several years, multiple companies have been using Flowman in production as a robust and reliable solution for efficiently building

We are excited and proud to announce the official release of Flowman 1.0. Flowman is a tool for performing complex data transformations in a structured